US higher education is in a tumultuous period, driven by changing perceptions of the value of college, funding and student aid challenges, demographic shifts, a move toward skills-based rather than degree-based hiring, and changes in student expectations that began before the pandemic and were further shaped by it.

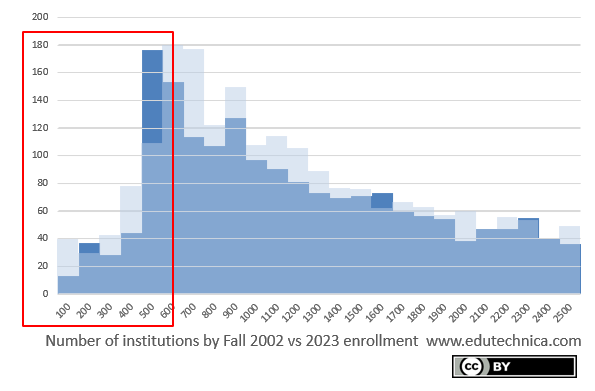

Since we began tracking Learning Management System (LMS) usage in US higher education over a decade ago, we’ve recorded more than 250 institutions that have closed or consolidated. This trend seems to be accelerating, and combined with year-to-year shifts in student enrollments, it is beginning to show up in our data.

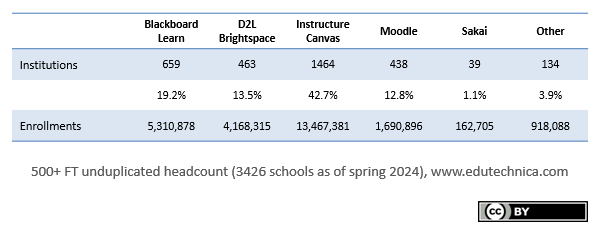

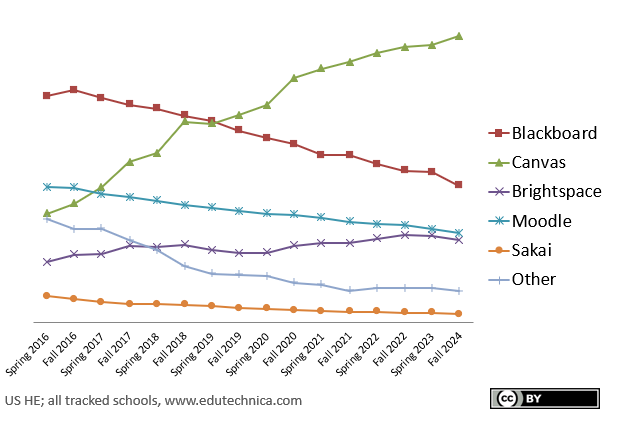

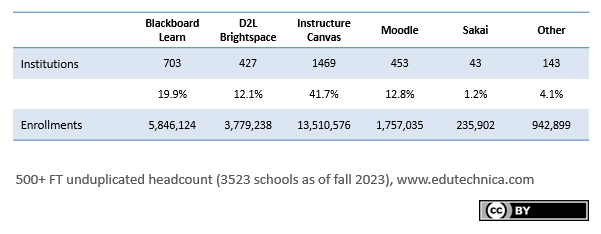

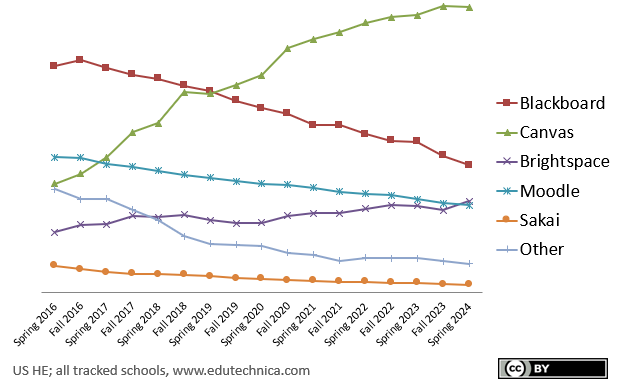

This spring, we note two significant developments. First, D2L Brightspace has overtaken Moodle as the third-most popular LMS in US higher education. D2L has been winning new Brightspace customers at an accelerating pace. Moodle’s losses come from a mix of LMS switches and an uptick in smaller schools that are closing or consolidating. Second, Instructure no longer appears to be winning new customers at the pace it once did. This, combined with school closures and mergers within its existing customer base, has slowed Instructure’s US higher education growth.

For many years, we have consistently tracked institutions with more than 500 students, a threshold we determined indicates a strong likelihood that a school would have and use an LMS. It’s also one indicator of an institution’s financial sustainability. As with every update, we’ve refreshed our data to reflect the latest IPEDS enrollment figures. We’ve also begun to remove the growing list of colleges and universities that have closed. The number of active, unique institutions with more than 500 students has again decreased. This trend, combined with the coming enrollment cliff, means that the next several years will be increasingly challenging not only for higher education institutions themselves but also for the technology vendors that sell solutions to them.
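
For readers who want to apply a similar filter to their own institution lists, the inclusion rule described above can be sketched roughly as follows. This is only an illustration under stated assumptions, not our actual pipeline: the `Institution` record, its field names, and the sample rows are all hypothetical, and real IPEDS files use their own column names.

```python
from dataclasses import dataclass


@dataclass
class Institution:
    # Hypothetical record layout; real IPEDS extracts use different field names.
    unit_id: str
    name: str
    enrollment: int
    closed: bool = False


def tracked_institutions(records, min_enrollment=500):
    """Keep active, unique institutions with MORE than min_enrollment students."""
    seen = set()
    kept = []
    for inst in records:
        # Drop closed schools and those at or below the enrollment threshold.
        if inst.closed or inst.enrollment <= min_enrollment:
            continue
        # Count each institution once, keyed on its (assumed) unique ID.
        if inst.unit_id in seen:
            continue
        seen.add(inst.unit_id)
        kept.append(inst)
    return kept


# Made-up sample rows, for illustration only.
sample = [
    Institution("001", "Example College A", 1200),
    Institution("002", "Example College B", 450),
    Institution("003", "Example College C", 800, closed=True),
    Institution("001", "Example College A", 1200),  # duplicate listing
    Institution("004", "Example College D", 500),   # exactly 500: excluded
    Institution("005", "Example College E", 501),
]

tracked_names = [inst.name for inst in tracked_institutions(sample)]
```

In this made-up sample, only Example College A and Example College E pass the filter: B and D fall at or below the threshold, C has closed, and the second listing of A is a duplicate.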